Table of Contents

- What Is the Answer to “Which AI Tool Is Developed by OpenAI for Generating Images? Midjourney DALL-E Stable Diffusion Dream”?

- Mistake 1: Vague Prompts in DALL-E (OpenAI’s Image AI Tool)

- Mistake 2: Ignoring Aspect Ratios in OpenAI’s DALL-E

- Mistake 3: Overlooking Professional Photo Adjustments – and why it matters

- Mistake 4: Not applying Psychological Triggers (I know, I know)

- Mistake 5: Generating Single Images Instead of Batches

- Mistake 6: Treating AI as a “One-Click” Solution

- Mistake 7: Ignoring the Competition’s Quality

- How to Get Started with Better DALL-E Images Today (I know, I know)


All right, so today we’re going over something that’s been on my mind a lot lately: which AI tool is developed by OpenAI for generating images? Midjourney, DALL-E, Stable Diffusion, or Dream? We’re looking at a massive generative AI market, which is projected to hit a staggering $1,022.41 billion by 2032, and I’m seeing a lot of people just spinning their wheels. You’ve got this really good engine under the hood of your browser, but if you don’t know how to tune it, you’re just burning gas.

I was talking to Jamie Chen, our content writer here. We were laughing about how many people still treat these tools like magic 8-balls instead of precision instruments. With OpenAI expected to generate $20 billion in revenue in 2025 alone, it’s clear everyone is buying in. But are they using it right? Honestly, mostly no.

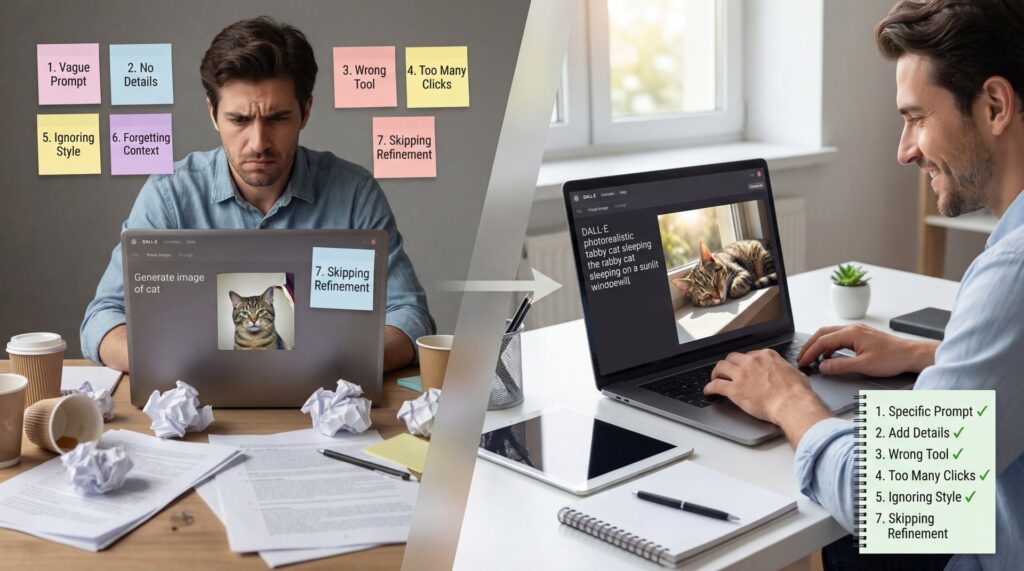

So, let’s go under the hood. I’m gonna walk you through the seven biggest mistakes I see people make with OpenAI’s image tools and, more importantly, how to fix them so you can get back on the road with better visuals—especially if you’re wondering which AI tool is developed by OpenAI for generating images: Midjourney, DALL-E, Stable Diffusion, or Dream. Seriously.

What Is the Answer to “Which AI Tool Is Developed by OpenAI for Generating Images? Midjourney DALL-E Stable Diffusion Dream”?

First off, let’s clear up the confusion. I get asked this exact question all the time: which AI tool is developed by OpenAI for generating images? Midjourney, DALL-E, Stable Diffusion, or Dream?

It’s a bit of a mouthful, but here’s the thing: when people ask which AI tool is developed by OpenAI for generating images (Midjourney, DALL-E, Stable Diffusion, or Dream), the answer is DALL-E. Specifically, right now in 2026, we’re mostly dealing with DALL-E 3 integrated directly into ChatGPT.

Midjourney is its own beast. Stable Diffusion is the open-source one you run on your own rig if you’re tech-savvy. Dream usually refers to Dream by WOMBO. But the one built by OpenAI? That’s DALL-E. And knowing which tool you’re using is half the battle, because DALL-E “thinks” differently than the others.

I mention that revenue number because it shows just how many people are using this system—and many still ask which AI tool is developed by OpenAI for generating images: Midjourney, DALL-E, Stable Diffusion, or Dream. If you wanna stand out, you can’t just do what the average user is doing. You have to be smarter.

Mistake 1: Vague Prompts in DALL-E (OpenAI’s Image AI Tool)

Now, here’s the thing about DALL-E inside ChatGPT: it’s smart, but it’s not a mind reader. The biggest mistake I see? People typing “dog on a skateboard” and expecting a masterpiece.

I’ve found that DALL-E thrives on context. It wants to know the why and the where. If you just give it a subject, it fills in the blanks with generic, stock-photo-looking junk. And nobody clicks on generic.

How to Fix Generic DALL-E Images from OpenAI’s AI Tool

You want to be specific. Instead of “dog on a skateboard,” try “A gritty, low-angle shot of a bulldog skating down a neon-lit Tokyo street at night, 35mm lens, cinematic lighting.” See the difference? We went from a cartoon to a scene. I personally prefer to give ChatGPT a “role” before I even ask for the image. I tell it, “You are an expert cinematographer.” It changes how the AI interprets your requests completely.

💡 Quick Tip: Describe the Camera Lens

Don’t just ask for an image; describe the camera lens. Adding terms like “wide-angle,” “macro lens,” or “GoPro footage” completely changes the vibe. Trust me on this. If you’re struggling to get the right look for your project, check out our workflow guides to see how pros structure their requests.
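If you write a lot of prompts, it can help to assemble them from parts so you never forget the lens or the lighting. Here’s a minimal sketch of that idea in Python. The `build_prompt` helper and its parameter names are made up for illustration; they are not part of ChatGPT or any OpenAI library, and the output is just a string you would paste into the chat.

```python
def build_prompt(scene, modifiers=(), role=None):
    """Combine a scene description with optional style modifiers and a role.

    Hypothetical helper: it only builds text, it does not call any AI service.
    """
    # Drop empty modifiers so we never emit stray commas.
    parts = [scene.rstrip(". ")] + [m.strip() for m in modifiers if m.strip()]
    description = ", ".join(parts) + "."
    if role:
        # Put the "role" first, as described in the article.
        return f"You are {role}. {description}"
    return description


print(build_prompt(
    "A gritty, low-angle shot of a bulldog skating down a neon-lit Tokyo street at night",
    modifiers=["35mm lens", "cinematic lighting"],
    role="an expert cinematographer",
))
```

Swapping “35mm lens” for “macro lens” or “GoPro footage” is then a one-word change, which makes it easy to compare looks side by side.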

Mistake 2: Ignoring Aspect Ratios in OpenAI’s DALL-E

So, let’s cover aspect ratios. This one drives me nuts. By default, DALL-E often wants to give you a square (1:1) image. That’s great for Instagram in 2015, but for a YouTube thumbnail? It’s useless.

If you take a square image and try to crop it to 16:9 later, you lose the top and bottom of your composition. You chop off heads. You lose the text. It’s a mess.
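To put a number on how much you lose, here’s a quick sketch of the crop arithmetic. The `center_crop` function is a hypothetical helper, and the 1024×1024 input is just an assumed example of a square image; the math is plain geometry and works for any size.

```python
def center_crop(width, height, ratio_w, ratio_h):
    """Return (new_width, new_height, fraction_lost) for the largest crop
    of a width x height image that has the aspect ratio ratio_w:ratio_h."""
    if width * ratio_h > height * ratio_w:
        # Image is too wide for the target: keep the height, trim the sides.
        new_w, new_h = height * ratio_w // ratio_h, height
    else:
        # Image is too tall for the target: keep the width, trim top and bottom.
        new_w, new_h = width, width * ratio_h // ratio_w
    lost = 1 - (new_w * new_h) / (width * height)
    return new_w, new_h, lost


w, h, lost = center_crop(1024, 1024, 16, 9)
print(f"{w}x{h}, {lost:.2%} of the image is cropped away")
```

For a square source, the crop keeps the full width but only 9/16 of the height, so 7/16 of the picture (a bit under half) ends up on the cutting-room floor.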

The Fix for OpenAI’s DALL-E Image AI Tool Is Simple

You have to tell the tool what you want upfront. In ChatGPT, you can literally just say “Aspect ratio 16:9” or “Wide format.” I was surprised by how many creators skip this step. They generate a square, then stretch it to fit a thumbnail and it looks warped. If you’re making content for YouTube or social media, the aspect ratio is the frame of your car. You can’t change the frame after the car is built without ruining the whole thing.
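One wrinkle if you ever script this: image models usually offer a short menu of sizes, not every ratio. The sketch below picks the closest size to the ratio you want. The `SUPPORTED_SIZES` list is an assumption based on the sizes documented for the DALL-E 3 API (1024×1024, 1792×1024, 1024×1792); check the current docs before relying on it, and note that `closest_size` is a made-up helper name.

```python
import math

# Assumption: sizes documented for the DALL-E 3 API; verify against current docs.
SUPPORTED_SIZES = [(1024, 1024), (1792, 1024), (1024, 1792)]


def closest_size(ratio_w, ratio_h, sizes=SUPPORTED_SIZES):
    """Pick the (width, height) whose aspect ratio is nearest to ratio_w:ratio_h.

    Ratios are compared on a log scale so that "twice as wide" and
    "twice as tall" count as equally far from square.
    """
    target = math.log(ratio_w / ratio_h)
    return min(sizes, key=lambda s: abs(math.log(s[0] / s[1]) - target))


print(closest_size(16, 9))   # landscape request
print(closest_size(9, 16))   # portrait request
```

Under that assumed size list, a 16:9 request lands on the wide 1792×1024 option, which is close to 16:9 but not exact, so a small trim may still be needed for a pixel-perfect thumbnail.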

Mistake 3: Overlooking Professional Photo Adjustments – and why it matters

Now, if you want to really get professional results, you have to speak the language of photography. Real talk. A lot of users treat DALL-E like a clip-art generator. But here’s what you want to do. You need to define the lighting. Should it be “golden hour”? Maybe “studio lighting”? Or perhaps “harsh fluorescent”?

Why Lighting Matters (yes, really)

I think lighting is the single biggest factor in making an AI image look real. Without lighting instructions, the AI creates this flat, evenly lit look that screams “fake.” Also, consider the film stock. It sounds nerdy, but adding “Kodak Portra 400” or “Fujifilm aesthetic” gives the image a grain and color grade that feels organic.

Pro Tip: If your image looks too “plastic,” add the words “imperfect skin texture,” “film grain,” or “motion blur” to your prompt. Perfection looks fake; flaws look real.

Mistake 4: Not applying Psychological Triggers (I know, I know)

Let’s be real for a second. We’re making images to get people to stop scrolling. If your image is pretty but boring, it fails. I’ve seen so many people generate “nice” images that get zero clicks. Why? No emotion.

The Face Focus Factor

According to our own internal data here at Banana Thumbnail, thumbnails with a clear, emotive face (what we call “Face Focus”) generate a 38% higher click-through rate (CTR). If you aren’t telling DALL-E to make the subject “express shock,” “look skeptical,” or “laugh hysterically,” you are leaving money on the table.
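Here’s what a lift like that could mean in raw clicks. This is a back-of-the-envelope sketch, not our actual analytics: it assumes the 38% is a relative lift (38% more than whatever your CTR is now), and the 5% baseline CTR and 100,000 impressions are made-up numbers for illustration.

```python
def ctr_with_uplift(baseline_ctr, relative_uplift):
    """New CTR after applying a relative uplift (0.38 means +38%)."""
    return baseline_ctr * (1 + relative_uplift)


def extra_clicks(impressions, baseline_ctr, relative_uplift):
    """Additional clicks gained from the uplift at a given impression count."""
    return impressions * baseline_ctr * relative_uplift


baseline = 0.05        # hypothetical 5% CTR
uplift = 0.38          # the 38% figure, read as a relative lift
impressions = 100_000  # hypothetical impression count

print(f"New CTR: {ctr_with_uplift(baseline, uplift):.2%}")
print(f"Extra clicks: {extra_clicks(impressions, baseline, uplift):.0f}")
```

Plug in your own baseline and impressions; the point is that a relative lift compounds with whatever traffic you already have.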

🤔 Did You Know?

Faces drive clicks. Our research shows that Face Focus thumbnails generate 38% higher CTR than those without. If you want to see how this works in practice, take a look at our features page to see how we prioritize facial expressions in design.

We actually covered this concept of emotional hooks in our article on 9 AI Thumbnail Mistakes Killing Your Views. It’s worth a read if you want to go deeper into the psychology of the click.

Mistake 5: Generating Single Images Instead of Batches

From there, you need to know about iteration. A rookie mistake is asking for one image, looking at it, saying “meh,” and giving up. When I’m working on a project, I never ask for just one. I ask for four variations.

The “Batch” Workflow

In ChatGPT with DALL-E 3, it can be lazy and only give you one. You have to push it. I usually say, “Generate 4 distinct variations of this concept.” Why? Because the AI is rolling dice. Sometimes you get snake eyes, sometimes you get boxcars. If you only roll once, you’re betting everything on a single toss.
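The dice analogy is easy to put into numbers. The sketch below assumes each image is an independent roll with the same chance of being a “keeper,” which is a simplification, and the 30% hit rate is a made-up figure, not something measured from DALL-E.

```python
def chance_of_a_keeper(p_single, n_images):
    """Probability that at least one of n independent images is usable,
    given each one has probability p_single of being usable."""
    return 1 - (1 - p_single) ** n_images


p = 0.30  # hypothetical chance that any single image is good enough
for n in (1, 2, 4, 8):
    print(f"{n} image(s): {chance_of_a_keeper(p, n):.0%} chance of at least one keeper")
```

With that assumed hit rate, going from one image to four takes you from roughly a one-in-three shot to about three-in-four, which is why I always ask for a batch.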

Mistake 6: Treating AI as a “One-Click” Solution

Now, this is a big one. People think the AI output is the final product. Honestly? It’s almost never the final product. The output you get is actually the raw material. If you look at the generative AI market growth, it’s exploding because professionals are using these tools to start their work, not finish it.

The Text Problem

DALL-E 3 is way better at text than it used to be, but it still messes up spellings or puts text in weird spots. I prefer to generate my images without text, and then add the text later in a dedicated editor. Trying to get the AI to do the typography perfectly is like trying to get a mechanic to paint a portrait. It’s not what the tool is best at. If you’re struggling with getting the AI to understand exactly what you want text-wise, check out our guide on 5 ChatGPT Image Prompt Mistakes Killing Your CTR.

Mistake 7: Ignoring the Competition’s Quality

Finally, let’s talk about the market. With ChatGPT facing pressure from Google and other rivals closing the gap, the quality bar is rising every single week. What looked impressive in 2024 looks cheap in 2026. If you’re using the same prompts you used two years ago, your images look dated.

Stay Current

I make it a habit to test new style keywords every Monday. Right now, “hyper-surrealism” and “mixed media collage” are trending. Six months ago, it was “cyberpunk.” You have to keep your ear to the ground. If you don’t, your content starts to look like “AI slop”: that generic, shiny, smooth look that everyone ignores.

**Analyze**

Look at the top-performing images in your niche this week. What lighting are they using?

**Reverse Engineer**

Describe that style to ChatGPT and ask it to generate a prompt for you.

**Iterate**

Generate 4 variations, pick the best, and manually edit the colors to pop.

The market is growing at roughly 37% annually. That means the tools are getting better, but so are your competitors. You can’t afford to be lazy with your inputs.

How to Get Started with Better DALL-E Images Today (I know, I know)

So, let’s wrap this up with some practical advice. If you’re sitting there thinking, “Okay, I’ve been making these mistakes, now what?”, don’t sweat it. We’ve all been there. The first thing you want to do is stop treating the prompt box like a Google search bar. Treat it like a conversation with a creative partner.

Start by defining your aspect ratio. Then, set the scene with lighting and camera lens details. Ask for emotion. And for the love of gears and gaskets, don’t use the very first image it spits out. I’ve found that just taking an extra 30 seconds to polish your prompt saves you 20 minutes of trying to fix a bad image later.

📋 Quick Reference

Before you hit “Generate,” check this list:

1. Did I set the aspect ratio (16:9)?

2. Did I specify lighting (e.g., “cinematic,” “soft”)?

3. Did I ask for a specific emotion?
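If you like your checklists automated, the three questions above can be turned into a rough pre-flight check. This is a sketch only: the `CHECKS` keyword patterns are my own guesses at what counts as an aspect ratio, lighting, or emotion cue, and simple keyword matching will miss plenty of valid phrasings.

```python
import re

# Hypothetical keyword patterns for each checklist item; extend them to taste.
CHECKS = {
    "aspect ratio": r"\b\d+:\d+\b|wide format|aspect ratio",
    "lighting": r"lighting|golden hour|cinematic|\bsoft\b|fluorescent",
    "emotion": r"shock|skeptical|laugh|smil|angry|surprised|excited",
}


def missing_items(prompt):
    """Return the checklist items that the prompt does not appear to cover."""
    text = prompt.lower()
    return [name for name, pattern in CHECKS.items() if not re.search(pattern, text)]


print(missing_items("dog on a skateboard"))
print(missing_items("Aspect ratio 16:9, a bulldog looking skeptical, golden hour"))
```

An empty list means the prompt at least mentions all three; it says nothing about whether the image will be any good, so you still have to look at the results.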

If you want to speed this up, our pricing packages include tools that automate this optimization for you.

Thanks for reading, guys. If you think this was helpful, be sure to check out the rest of the blog. Till next time.

Frequently Asked Questions

What are the most common mistakes users make with OpenAI’s AI Image Tools?

The most common mistakes are using vague prompts without context, ignoring aspect ratios (resulting in square images), and failing to specify lighting or camera styles, which leads to a generic “AI look.”

How has the adoption of OpenAI’s AI Image Tools changed over the past year?

Adoption has surged, with OpenAI projected to hit $20 billion in revenue in 2025, moving from an experimental toy for tech enthusiasts to a standard daily tool for marketing professionals and content creators.

What are the key challenges professionals face when using OpenAI’s AI Image Tools?

Professionals struggle most with keeping character faces consistent across multiple images, getting accurate text rendering within the image, and removing the “artificial” sheen that DALL-E often applies by default.
