Table of Contents

- What Is AI Age Progression and Regression, Really?

- The Models Behind the Magic: From Age Estimation to Facial Age Manipulation

- How Accurate Is It in 2025? The Numbers You Can Actually Trust

- What Should Creators Do to Get Consistent, Realistic Age Transformations?

- For Pros: Forensic, Clinical, and Compliance Use Cases in 2025

People often think AI age progression and regression is just about adding wrinkles or smoothing skin, but the reality is more complex. In 2025, these tools preserve your unique identity while adjusting skin texture, bone structure hints, and hair changes to show you at different life stages. Whether you’re curious about seeing yourself as a teenager or in your eighties, or creating characters that span decades, this technology has moved beyond simple filters into research, forensics and healthcare applications.

This post breaks down how it works without excessive technical jargon, shares the latest 2025 data and explains practical applications for personal interest, marketing projects, or medical processes. We’ll cover why image resolution matters, the types of models involved, and how biological age prediction connects to visual transformations.

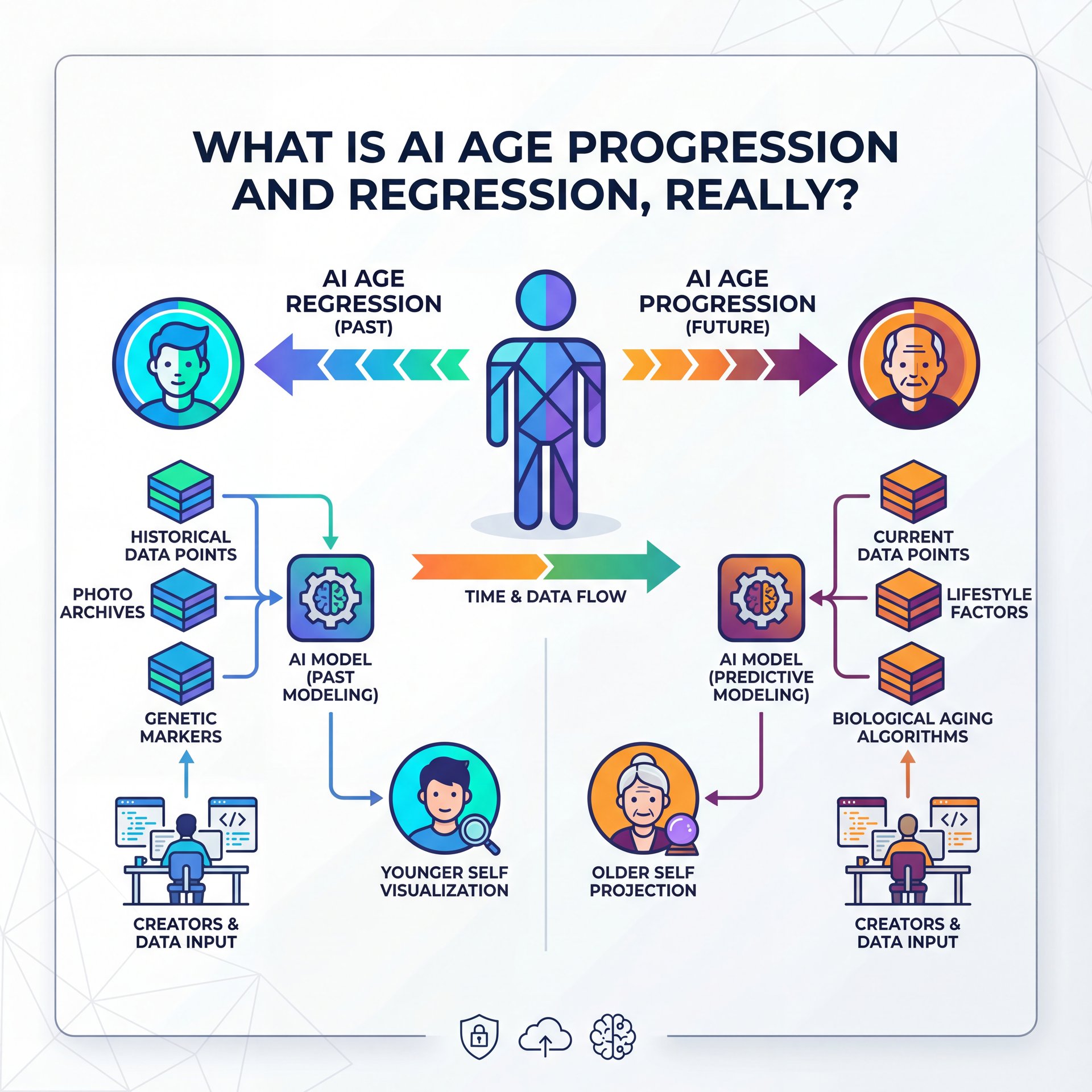

What Is AI Age Progression and Regression, Really?

AI age progression and regression involves two distinct tasks: age estimation and age transformation. Age estimation determines someone’s age from a photo or biometric data, while age transformation creates a new image showing the same person at a different age. Progression adds years, regression subtracts them, but both rely on initial estimation to guide changes.

The process starts with face detection and alignment, ensuring key points like eyes, nose, and mouth are correctly positioned. The system then extracts core identity features to ensure results still resemble you. A generative model, typically a diffusion model in 2025, adjusts age-specific traits based on learned patterns such as crow’s feet formation, skin texture variations, or jawline definition. The challenge lies in balancing age adjustments without losing recognizable identity, which separates convincing results from the uncanny valley outcomes we’ve seen in earlier versions.

Under the hood, the workflow has three stages: detect and align the face so anchor points sit in consistent positions, extract identity features that keep the result recognizable, then apply a generative model that edits specific facial age attributes. The model learns patterns like wrinkle formation, skin gradient changes, and volume shifts in cheeks and jawlines.
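To make the alignment stage concrete, here is a minimal sketch of one common approach: leveling the face from two eye landmarks. It assumes the eye coordinates already come from some landmark detector; the function names `eye_alignment` and `rotate_point` and the 64 px target eye distance are illustrative choices, not part of any specific tool.

```python
import math


def eye_alignment(left_eye, right_eye, target_eye_dist=64.0):
    """Return (tilt_degrees, scale) needed to level the eyes and
    normalize the distance between them.

    left_eye and right_eye are (x, y) pixel coordinates.
    target_eye_dist is an assumed value for illustration.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        raise ValueError("eye landmarks must be distinct points")
    tilt = math.degrees(math.atan2(dy, dx))  # angle of the eye line
    scale = target_eye_dist / dist           # resize factor after rotation
    return tilt, scale


def rotate_point(point, center, tilt_degrees):
    """Rotate point around center by -tilt_degrees, undoing the head tilt."""
    theta = math.radians(-tilt_degrees)
    x = point[0] - center[0]
    y = point[1] - center[1]
    xr = x * math.cos(theta) - y * math.sin(theta)
    yr = x * math.sin(theta) + y * math.cos(theta)
    return (center[0] + xr, center[1] + yr)


# Example: the right eye sits 20 px lower than the left eye.
tilt, scale = eye_alignment((100, 120), (180, 140))
leveled_right_eye = rotate_point((180, 140), (100, 120), tilt)
```

After the rotation, both eyes share the same vertical coordinate, which is what gives the later stages a consistent frame of reference.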

For casual users, this explains why clean, frontal photos with good lighting outperform group shots or odd-angle selfies. For creators, character continuity improves when camera position and lens choice stay consistent across reference shots. For professionals, this is where model calibration, age distribution in training data, and error quantification become critical.

The Models Behind the Magic: From Age Estimation to Facial Age Manipulation

Better age estimation directly enables better transformation. In 2025, age estimation models trained on medical and face datasets build on architectures like EfficientNet or DenseNet for compact, high-accuracy perception, plus detection and segmentation models such as YOLOv5 and U-Net for isolating faces or specific anatomical structures. In medical imaging contexts like dental or bone analysis, mean absolute error (MAE) reaches as low as 0.56–3.12 years for adolescents and adults, with errors rising at the extremes, especially beyond 90 years, where physiological variations become less predictable.

Popular face-focused frameworks show different performance profiles. DeepFace yields an average error of 11.28 years while InsightFace achieves 8.35 years at 224×224 pixel resolution. These numbers highlight why model selection and input quality matter significantly for practical applications.
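Since MAE is the figure quoted throughout this post, a short sketch of how it is computed may help. The ages below are made-up illustrative values, not results from any of the models mentioned.

```python
def mean_absolute_error(true_ages, predicted_ages):
    """Average of the absolute differences between true and predicted ages."""
    if len(true_ages) != len(predicted_ages):
        raise ValueError("both lists must have the same length")
    if not true_ages:
        raise ValueError("need at least one sample")
    total = sum(abs(t - p) for t, p in zip(true_ages, predicted_ages))
    return total / len(true_ages)


# Illustrative values only: the errors are 6, 5, 7, and 2 years.
true_ages = [25, 40, 63, 18]
predicted_ages = [31, 35, 70, 20]
print(mean_absolute_error(true_ages, predicted_ages))  # 5.0
```

An MAE of 5.0 here means the predictions miss by five years on average; it says nothing about whether the misses run high or low.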

⚠️ Common Mistake: “Bigger Image = Better Result”

Uploading 6K images won’t guarantee better age edits. Most systems are tuned for 224–512 px inputs; feeding huge files can blur fine age cues after internal downscaling and upsampling. Sharper results often come from clean 512 px crops rather than ultra-high-res uploads.
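One way to act on this is to plan a tight square crop around the face before any resizing happens. The sketch below only does the arithmetic; it assumes a face bounding box from some detector, and the function name `plan_square_crop`, the 25% margin, and the 512 px target are illustrative assumptions.

```python
def plan_square_crop(face_box, image_size, margin=0.25, target=512):
    """Return (crop_box, scale) for a square crop centered on the face.

    face_box is (left, top, right, bottom) in pixels.
    image_size is (width, height) in pixels.
    margin is the extra context added on each side, as a fraction of
    the larger face dimension. scale is the resize factor that brings
    the crop to target x target pixels.
    """
    left, top, right, bottom = face_box
    width, height = image_size
    side = max(right - left, bottom - top) * (1 + 2 * margin)
    side = min(side, width, height)  # the crop cannot exceed the image
    center_x = (left + right) / 2
    center_y = (top + bottom) / 2
    # Clamp the crop origin so the square stays inside the image.
    x0 = min(max(center_x - side / 2, 0), width - side)
    y0 = min(max(center_y - side / 2, 0), height - side)
    return (x0, y0, x0 + side, y0 + side), target / side


# A 1000 px face inside a 6000x4000 photo.
crop_box, scale = plan_square_crop((2000, 1000, 3000, 2000), (6000, 4000))
```

Here the crop is 1500 px square, so reaching 512 px takes a single downscale of roughly 0.34 on the face region alone, rather than shrinking the whole 6K frame and hoping the face survives.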

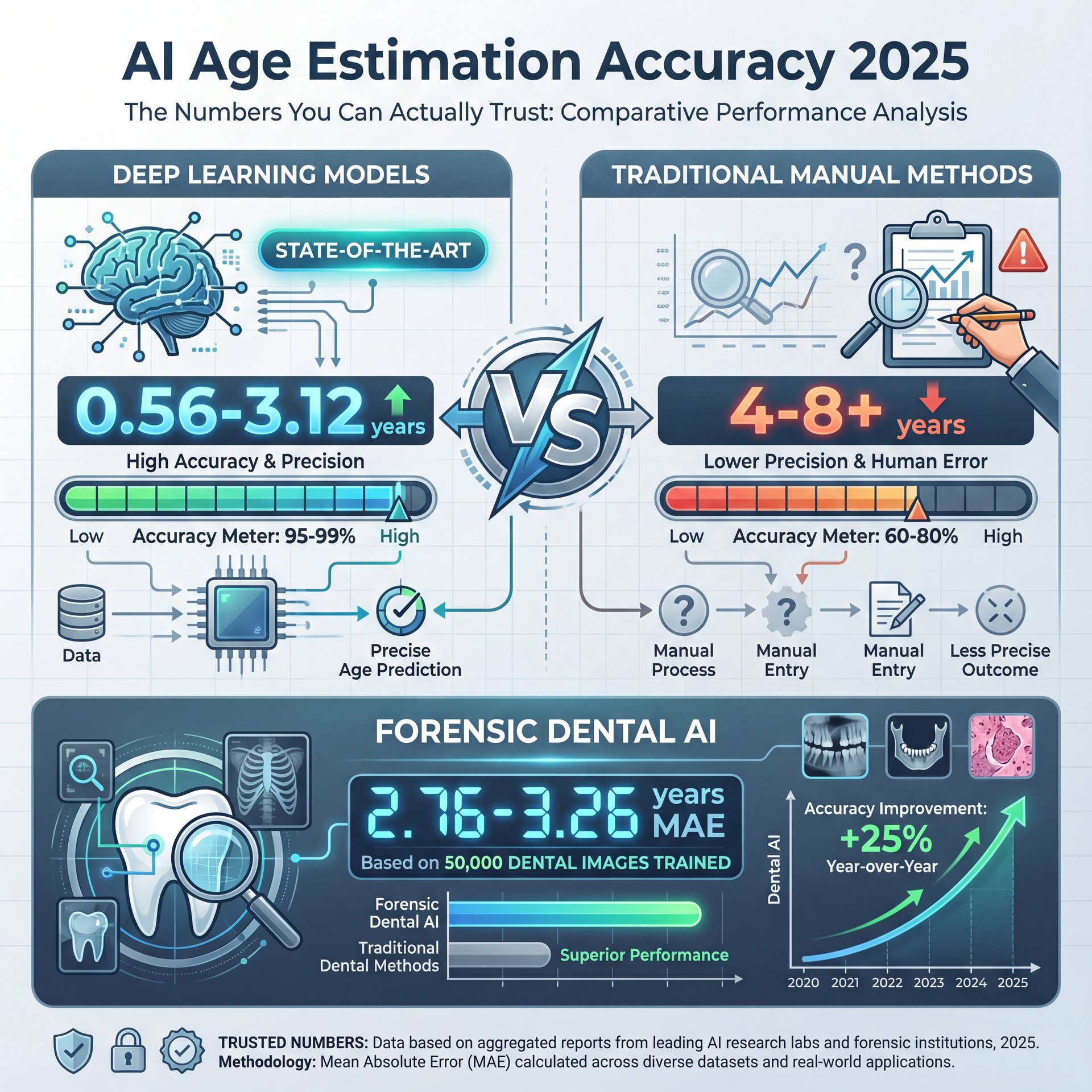

How Accurate Is It in 2025? The Numbers You Can Actually Trust

“Looks realistic” is not a metric. Across medical-image-trained systems including dental X-rays and bone images, state-of-the-art deep learning models reach MAEs in the 0.56–3.12 year range for adolescents and adults, substantially outperforming traditional manual estimation methods. In forensic settings using only dental data, convolutional neural networks trained on 50,000 dental images achieve 2.76–3.26 years MAE—far better than manual charts or visual grading.

The question of whether AI-generated faces are “obviously fake” is shifting rapidly. In a 2025 study, people recognized AI-generated age-progressed faces with 76% accuracy compared to 74% for authentic photos. A two-point gap means viewers handled synthetic and authentic images about equally well, which shows how believable these synthetic age edits have become.

Biological age prediction represents another parallel development. Models trained on photoplethysmography (PPG) waveforms—light-based readings from smartwatches and wearables—show Pearson correlation of 0.49–0.54 with chronological age, with MAEs around 7.57–9.69 years across large clinical datasets. While not perfect, this accuracy suffices for use as digital biomarkers in cardiovascular risk assessment within massive datasets like UK Biobank, and for flagging age-acceleration trends warranting closer medical examination.
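Pearson correlation and MAE answer different questions, which is why the PPG results report both. The sketch below computes each on made-up values to show how they can disagree; the helper names are illustrative.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with 2+ values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


def mean_absolute_error(true_vals, predicted_vals):
    """Average absolute difference between paired values."""
    return sum(abs(t - p) for t, p in zip(true_vals, predicted_vals)) / len(true_vals)


# Illustrative only: a predictor that is always exactly 8 years too high.
chronological = [30, 45, 60, 75]
predicted = [age + 8 for age in chronological]

r = pearson_r(chronological, predicted)              # perfect ordering, r is 1
mae = mean_absolute_error(chronological, predicted)  # but an 8-year miss
```

A constant offset leaves the correlation perfect while the MAE stays at 8 years, so a moderate correlation such as 0.49–0.54 and an MAE of several years describe two separate aspects of the same model.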

Cross-modality age estimation is emerging as a significant advancement in 2025, with AI trained to estimate age across images, voice recordings, and biometric data like PPG waveforms. These systems combine multiple input types for more robust validation and identity control compared to single-modality approaches.

🤔 Did You Know? Accuracy Loves Clean Inputs

Age estimation error often drops when you align, crop, and normalize images before analysis. Tools supporting face alignment and color normalization typically yield tighter age predictions and smoother transformations.

What Should Creators Do to Get Consistent, Realistic Age Transformations?

For creators building characters that evolve across a series, consistent preparation determines believability. Maintaining stable lenses, distances, lighting, and neutral expressions gives models a reliable foundation. Keep a small set of “anchor” photos for each character and age bracket you’re targeting—teen, 30s, 50s, 70s—then let the model interpolate between those anchors rather than jumping from a single reference.
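As a rough illustration of working between anchors, the sketch below picks the two anchor ages that bracket a target age and computes a blend weight. How a given tool actually consumes such a weight is an assumption here; the function name `bracket_anchors` and the anchor ages are hypothetical.

```python
from bisect import bisect_left


def bracket_anchors(target_age, anchor_ages):
    """Return (lower_anchor, upper_anchor, weight) for a target age.

    weight is 0.0 at the lower anchor and 1.0 at the upper anchor.
    Targets outside the anchor range snap to the nearest anchor.
    """
    ages = sorted(anchor_ages)
    if not ages:
        raise ValueError("need at least one anchor age")
    if target_age <= ages[0]:
        return ages[0], ages[0], 0.0
    if target_age >= ages[-1]:
        return ages[-1], ages[-1], 0.0
    upper_index = bisect_left(ages, target_age)
    lower, upper = ages[upper_index - 1], ages[upper_index]
    weight = (target_age - lower) / (upper - lower)
    return lower, upper, weight


# Hypothetical anchors for teen, 30s, 50s, and 70s reference sets.
print(bracket_anchors(45, [16, 35, 55, 75]))  # (35, 55, 0.5)
```

A target of 45 lands halfway between the 35 and 55 anchors, so both reference sets would inform the result instead of one distant photo doing all the work.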

Image resolution and face alignment significantly control output quality. The optimal range is typically clean 224–512 px crops, taken straight-on or at three-quarter angle, without harsh shadows. This mirrors the thinking behind effective thumbnail creation: clean subject separation, clear focus, and consistent style.

Prep Your Inputs

Shoot 2–3 aligned reference shots per age bracket under similar lighting; crop to 224–512 px and keep expressions neutral so the model can read micro-features.

Choose the Model Path

For portraits, use a diffusion-based age transformer with identity embedding; for sequences, lock a seed so shots stay consistent across frames.

Validate and Calibrate

Compare outputs against anchors; if faces drift, rerun with stronger identity weight or add a mid-age checkpoint to steer the model.
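The validation step can be sketched as a similarity check between identity embeddings. This assumes you can obtain an embedding vector for each image from a face recognition model; the tiny three-dimensional vectors, the 0.6 threshold, and the function names are illustrative assumptions, and a real threshold depends on the embedding model in use.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / (norm_a * norm_b)


def find_drifted(anchor_embedding, output_embeddings, min_similarity=0.6):
    """Return the names of outputs whose identity drifted from the anchor.

    output_embeddings maps an output name to its embedding vector.
    min_similarity is an assumed cutoff for illustration.
    """
    return [
        name
        for name, embedding in output_embeddings.items()
        if cosine_similarity(anchor_embedding, embedding) < min_similarity
    ]


# Toy vectors standing in for real identity embeddings.
anchor = [1.0, 0.0, 0.0]
outputs = {
    "age_30": [0.9, 0.1, 0.0],  # still close to the anchor identity
    "age_70": [0.2, 0.9, 0.1],  # has wandered away from it
}
print(find_drifted(anchor, outputs))  # ['age_70']
```

Outputs flagged this way are the candidates for a rerun with a stronger identity weight or an added mid-age checkpoint.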

For video-based character arcs, batch processing with a locked seed maintains continuity across a series. Keep metadata consistent—if your target “age 60” shot uses warm grading and soft contrast, carry that style into other outputs for coherent storytelling.

🔧 Tool Recommendation: Video + Face Consistency

For character arcs across video, pick tools that support identity-locked diffusion and batch seeds. Try workflows designed for face alignment and series consistency via Banana Thumbnail Workflows or render sequences through Video Generation to keep styles stable.

For Pros: Forensic, Clinical, and Compliance Use Cases in 2025

In forensics and healthcare, traditional age estimation methods such as manual dental charts, radiographic heuristics, or visual grading are being outperformed by CNNs trained on large, curated datasets. In dental forensics, models trained on 50,000 images report MAEs around 2.76–3.26 years, and in broader adolescent/adult cohorts we see 0.56–3.12 years. However, extreme ages exceeding 90, poorly imaged samples, and underrepresented populations still increase error. The solution involves more balanced data, calibrated outputs per demographic group, and transparent error reporting.

Biological age predictors from faces, PPG or multimodal stacks function as digital biomarkers rather than definitive clinical diagnoses. They serve as early-warning sensors. PPG-based models reach Pearson correlations of 0.49–0.54 with chronological age and MAEs under 10 years—sufficient to detect age-acceleration patterns correlating with cardiovascular risk and mortality signals in large populations. When evaluating these systems, separate “vanity metrics” like attractive aged photos from clinically relevant measures like MAE, proper calibration curves, and subgroup performance.

For deployment, set realistic confidence thresholds, require human-in-the-loop review for edge cases, and log subgroup results to monitor drift. In regulated spaces, maintain clear separation between “entertainment-grade” face transformation and “evidence-grade” estimation. Treat age transformations as illustrative, and support any decision-making with audited estimators and documented error ranges.
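A minimal sketch of two of these deployment habits, per-subgroup error logging and routing edge cases to a reviewer, might look like the following. The record layout, the 0.8 confidence cutoff, and the function names are assumptions for illustration; the age-90 boundary reflects the point above where errors tend to rise.

```python
from collections import defaultdict


def subgroup_mae(records):
    """Compute MAE per subgroup.

    records is an iterable of (group, true_age, predicted_age) tuples.
    """
    errors = defaultdict(list)
    for group, true_age, predicted_age in records:
        errors[group].append(abs(true_age - predicted_age))
    return {group: sum(errs) / len(errs) for group, errs in errors.items()}


def needs_human_review(confidence, predicted_age,
                       min_confidence=0.8, max_trusted_age=90):
    """Flag predictions that are low-confidence or in a high-error age range.

    Both thresholds are assumed values, to be set from validation data.
    """
    return confidence < min_confidence or predicted_age > max_trusted_age


# Made-up records: (subgroup label, true age, predicted age).
records = [
    ("18-40", 25, 27),
    ("18-40", 30, 29),
    ("90+", 93, 85),
]
print(subgroup_mae(records))  # {'18-40': 1.5, '90+': 8.0}
```

Logging these per-group figures over time is what makes drift visible: a single overall MAE can stay flat while one subgroup quietly gets worse.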

Frequently Asked Questions

How does AI handle age progression and regression differently?

Progression adds age-related features like wrinkles, hair changes and facial volume shifts while preserving identity; regression removes those cues to simulate younger appearance, often requiring stronger identity constraints.

What are the most accurate AI models for age estimation?

In 2025, medical-image-trained CNNs and EfficientNet/DenseNet variants show MAEs as low as 0.56–3.12 years for adolescents/adults. Popular face frameworks like DeepFace (11.28 years MAE) and InsightFace (8.35 years MAE) vary more widely.

How does image resolution impact age estimation accuracy?

Most pipelines perform best with aligned 224–512 px crops; too low resolution hides fine age cues, too high can introduce artifacts after internal resizing.

What are the limitations of AI in age estimation?

Accuracy drops at extreme ages and in underrepresented groups. Outputs can reflect dataset biases without careful calibration and auditing.