Table of Contents

- What Are Sora Cinematic Prompts Doing Wrong?

- Why Do Sora Cinematic Prompts Make Characters Look Weird?

- Best Sora Cinematic Prompt Settings for Realism

- How to Fix Audio and Motion Sync Issues

- Sora Cinematic Prompts vs. Legal Risks

- How to Get Started with Advanced Storyboarding

Here’s the thing about trying to get Hollywood-quality video out of AI right now. You type in a prompt that sounds pretty good in your head, hit generate, and what comes back looks like a fever dream where the laws of physics took a day off. We’ve all been there with Sora cinematic prompts. You burn through your credits hoping for a masterpiece and get a cat with five legs melting into the pavement.

It’s frustrating, right? But here’s what you want to do if you’re tired of wasting time and money on bad generations.

Today we’re going to cover why your Sora cinematic prompts are failing and, more importantly, how to fix them. I’ve spent a lot of time digging into the mechanics of these models, looking under the hood, and I found that most people are making the same seven mistakes. Whether you’re just messing around on your phone or producing content for a client, fixing these errors is going to save you a lot of headaches.

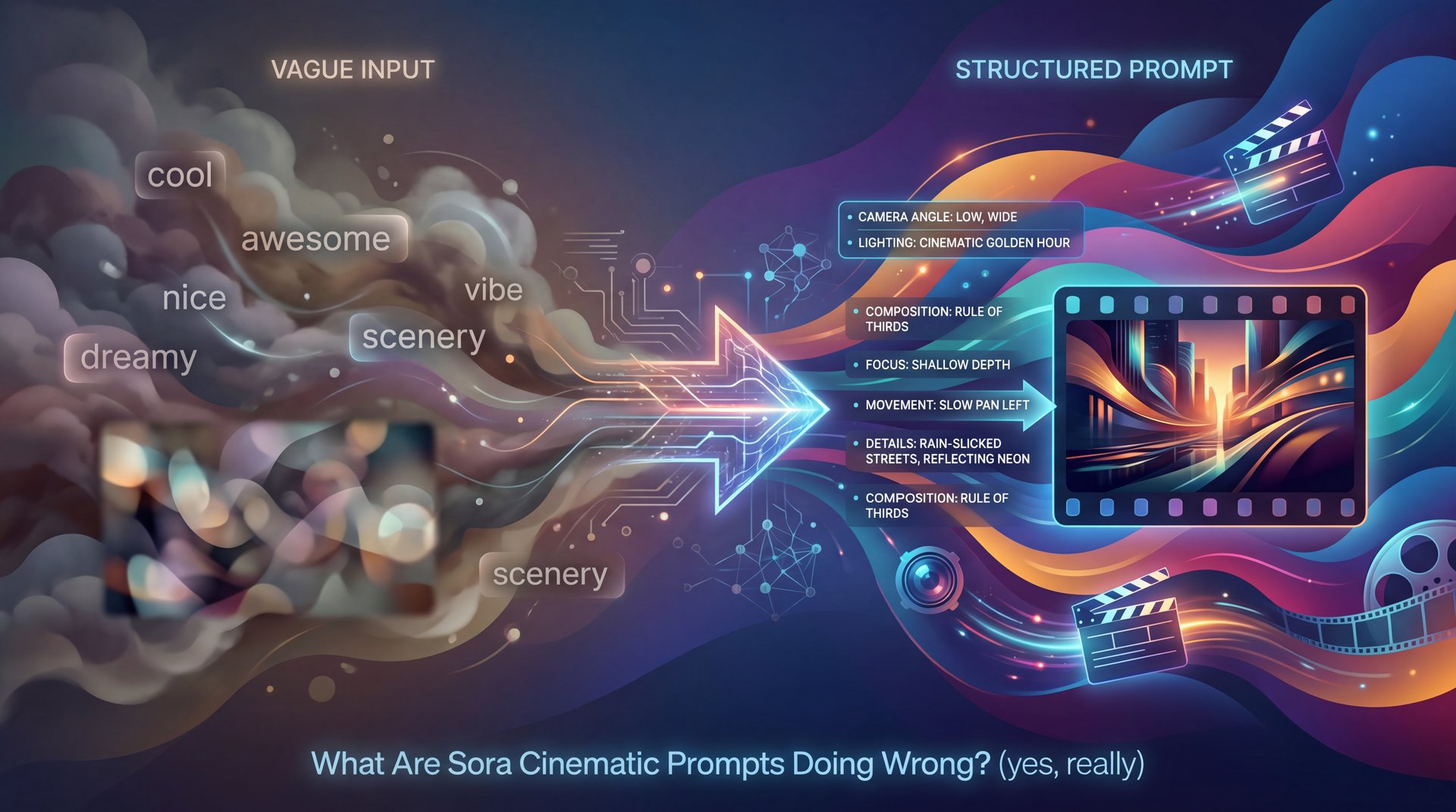

And look, the data backs this up. Recent testing from Hixx.ai in 2025 showed that AI video prompting failure rates drop from 67% for vague prompts to just 23% with structured descriptors, across 5,000+ user tests. That’s a huge difference. So let’s get into it and see what’s actually going on with your prompts.

What Are Sora Cinematic Prompts Doing Wrong?

The first thing we need to look at is the structure of your request. A lot of folks think they can just talk to Sora like they’re chatting with a buddy. They type “make a cool movie about a space battle” and expect Star Wars. But the AI doesn’t know what “cool” means to you.

In my experience, this is the number one reason for that “AI slop” look. You’re leaving too much up to interpretation.

If you want cinematic results, it helps to speak the language of cinema. You need to be specific. Instead of “a car driving,” say “a 1967 muscle car speeding down a wet, neon-lit street, low camera angle, motion blur on the wheels, 35mm film grain.” See the difference? We’re giving the model a blueprint, not just a suggestion.

⚠️ Common Mistake: Vague Sora Cinematic Prompts

Don’t just say “cinematic lighting.” Specify the type: “golden hour backlighting,” “harsh fluorescent overheads,” or “soft diffused window light.” Vague terms lead to generic, flat visuals 67% of the time, according to recent benchmarks.

I think a lot of people are intimidated by technical terms, but you don’t have to be a film school grad. You just need to describe the subject, the action, the environment, the lighting, and the camera movement. If you skip one of those, the AI has to guess. And honestly, it usually guesses wrong.
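If you want to hold yourself to that checklist, here’s a minimal Python sketch. It assumes you draft each prompt as a dict with five hypothetical keys (`subject`, `action`, `environment`, `lighting`, `camera`), reports which ones are empty, and flags a few vague words. The vague-word list is my own illustrative assumption; Sora doesn’t expose anything like this.

```python
# Hypothetical prompt linter: the field names and vague-word list are
# illustrative assumptions, not part of any Sora API.
REQUIRED_FIELDS = ("subject", "action", "environment", "lighting", "camera")
VAGUE_TERMS = ("cool", "cinematic lighting", "nice", "epic")


def lint_prompt(fields: dict[str, str]) -> list[str]:
    """Return human-readable warnings for a prompt split into named fields."""
    warnings = []
    # Any element left blank forces the model to guess.
    for name in REQUIRED_FIELDS:
        if not fields.get(name, "").strip():
            warnings.append(f"missing {name}")
    # Flag vague terms anywhere in the combined text (plain substring match).
    text = " ".join(fields.values()).lower()
    for term in VAGUE_TERMS:
        if term in text:
            warnings.append(f"vague term: '{term}'")
    return warnings


draft = {
    "subject": "a cool spaceship",
    "action": "fires at an enemy cruiser",
    "environment": "",
    "lighting": "cinematic lighting",
    "camera": "slow dolly-in, low angle",
}
print(lint_prompt(draft))
```

The check is a plain substring match, so a word like “coolant” would also trip the “cool” flag; treat the output as a nudge, not a verdict.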

Also, check out our guide on Sora invite code mistakes if you’re still having trouble even accessing the platform, because that’s a whole other can of worms.

Why Do Sora Cinematic Prompts Make Characters Look Weird?

Let’s cover the second substantial issue, and this one drives creators crazy. You get a great shot of your main character in the first clip, but in the next shot, they look like a completely different person. Or worse, their face starts morphing halfway through the video.

It turns out that roughly 73% of beginner Sora users report inconsistent character rendering as their top issue, based on Reddit and X feedback aggregated in Q4 2025. I saw a Reddit thread the other day where a user wasted 200 generations trying to keep a character’s face the same. That’s painful. And expensive.

Using Storyboard Mode for Consistency

Here’s what you want to do if that happens. You have to use the Storyboard mode or its equivalent in whatever tool you’re using. If you’re just generating one-off clips and trying to stitch them together later, you’re going to have continuity errors.

📊 Before/After: Storyboard Impact

Before: Manual prompting for multi-shot sequences often results in face-swapping and attire changes between clips.

After: Using Storyboard mode with timed descriptors maintains prompt adherence above 85% for videos under 10 seconds, keeping your characters consistent.

I’ve found that defining your character rigidly at the start of the session helps a ton. Lock in their features (“scar on left cheek, blue denim jacket, short messy hair”) and use those exact same keywords every single time.
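One way to enforce the “same keywords every time” rule is to store the character description once and prepend it to every shot instead of retyping it. Here’s a minimal sketch; `CHARACTER` and `lock_character` are hypothetical names of my own, and the output is just text you’d paste into each shot prompt.

```python
# Hypothetical helper: the character sheet is stored once so every shot
# prompt reuses exactly the same descriptor keywords.
CHARACTER = "scar on left cheek, blue denim jacket, short messy hair"


def lock_character(shot_prompts: list[str], character: str = CHARACTER) -> list[str]:
    """Prefix each shot prompt with the identical character descriptor."""
    return [f"Character: {character}. {shot}" for shot in shot_prompts]


shots = lock_character([
    "They walk out of a diner at night, handheld camera",
    "Close-up as they turn their head toward the street, shallow depth of field",
])
for shot in shots:
    print(shot)
```

Because the descriptor lives in one variable, a typo can’t creep into shot 7 that wasn’t in shot 1.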

Even with really precise prompts, though, older versions of these models struggled. That’s why the shift we’re seeing in late 2025 and heading into 2026 is so important. The new 3D-aware space-time attention architectures are finally starting to understand that a person is a 3D object that shouldn’t change shape just because they turned their head.

Best Sora Cinematic Prompt Settings for Realism

If you’ve already used Sora 2, you don’t need to reselect your settings every time. In my test generation, the vibe, the color grading, the motion, and the camera work all felt like an actual movie; it honestly looked very real. One thing I haven’t mentioned yet is the audio, which is exceptionally good in Sora 2. When other AI video generators try to make sound, the audio usually ends up weird or unrealistic, like muffled voices or random background noise that doesn’t match what’s happening on screen. Sora 2 produces sound that actually fits the scene: footsteps that echo realistically, wind that matches the environment, and little ambient details that make it feel like a real recording.

What surprised me was how much simple camera terminology changes the output. Sora 2 and competitors like Veo 3 respond well to specific lens data. If you tell the AI “shot on 50mm lens, f/1.8 aperture,” it understands depth of field and knows to blur the background.

The prompt field is where most people hit their first problem: they don’t really know how to prompt Sora 2. From what I’ve seen, people usually fall into two buckets. They either overcomplicate their prompts or don’t describe enough, and both lead to worse results. So how do you actually write a good prompt for Sora 2? A solid Sora prompt always follows the same structure: describe your scene first, then the cinematography, the mood, the actions, the dialogue, and, at the end, the sound. For the scene, start with a short description of what’s happening and who is in the shot. The cinematography is how it’s being filmed, so this is where you spell out what the video should look like from the camera’s point of view. After that, add a short line describing the mood; this can be something pretty simple.
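Here’s that six-part order (scene, cinematography, mood, actions, dialogue, sound) as a small template function. It’s a sketch under my own assumptions: the section names mirror the structure described above, empty sections are simply skipped, and the output is plain text you’d paste into the prompt field, not a call to any Sora API.

```python
# Order taken from the prompt structure described above; names are illustrative.
SECTION_ORDER = ("scene", "cinematography", "mood", "actions", "dialogue", "sound")


def build_prompt(**sections: str) -> str:
    """Join the provided sections in a fixed order, skipping empty ones."""
    unknown = set(sections) - set(SECTION_ORDER)
    if unknown:
        raise ValueError(f"unknown sections: {sorted(unknown)}")
    parts = [
        f"{name.capitalize()}: {sections[name].strip()}"
        for name in SECTION_ORDER
        if sections.get(name, "").strip()
    ]
    return "\n".join(parts)


print(build_prompt(
    sound="rain on the windows, distant traffic",
    scene="A detective sits alone in a diner booth at 2 a.m.",
    cinematography="slow push-in, 50mm lens, f/1.8",
    mood="tense and quiet",
))
```

Passing the sections as keyword arguments means you can write them in any order and still get scene-first output.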

Triggering Cinematic Quality

Cinematic quality doesn’t happen by default, though; you have to ask for it. I prefer prompts that reference high-end equipment. “Shot on Arri Alexa, anamorphic lens” tends to trigger a widescreen, cinematic look with the kind of lens flares that scream “movie quality.”

🤔 Did you know? In Sora 2, sound and picture are generated together. If a door slams in the background, the camera shake, the echo, and the lighting shift are all generated at once, so the result doesn’t feel composited or fake. It’s also more accurate, realistic, and controllable than the previous version. The physics behind Sora 2 has been trained on real-world footage, which means it understands depth, texture, and natural movement much better: when something moves in the frame, it respects perspective, shadows, and reflections almost perfectly, especially in scenes with water, glass, or complex lighting. It also supports both text-to-video and image-to-video workflows, which gives you a ton of flexibility. You can write a detailed prompt and let it build the whole scene, or drop in a single still image and it will generate motion that sticks to that image’s style and composition. It’s also really good at understanding common trends, styles, and genres, so if you’re building something that looks like a “get ready with me” routine, you don’t have to prompt the model step by step.

Here’s a tip for the pros reading this: watch your frame rates. If you want a cinematic feel, specify “24fps.” If you want that smooth, soap-opera look (which you probably don’t for a movie), you’d ask for 60fps. The AI actually pays attention to this now.
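If you reuse the same camera language across shots, it can help to keep it in one place. This hypothetical `CameraSpec` dataclass just formats the camera, lens, aperture, and frame-rate details mentioned in this section into a prompt fragment; whether Sora honors each value is up to the model, not the code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraSpec:
    """Hypothetical container for the camera details discussed above."""
    camera: str = "Arri Alexa"
    lens: str = "50mm lens"
    aperture: str = "f/1.8"
    fps: int = 24  # 24fps for a film look; 60fps reads as soap-opera smooth

    def to_prompt(self) -> str:
        # Produces plain prompt text; nothing here talks to Sora directly.
        return f"shot on {self.camera}, {self.lens}, {self.aperture} aperture, {self.fps}fps"


print(CameraSpec().to_prompt())
print(CameraSpec(lens="anamorphic lens").to_prompt())
```

Keeping these as fields with defaults means a scene can override one detail (say, `fps=60` for a deliberate soap-opera look) without retyping the rest.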

I remember reading about how the marketing agency VibraMedia achieved 2.3x ROI producing 150 clips a month at an average cost of $127 per clip. They stopped trying to fix rough AI video in post-production and started spending more time on prompt engineering instead. It pays off.

How to Fix Audio and Motion Sync Issues

All right, let’s talk about audio and motion. This is where things get tricky for professionals. You’re trying to sync a voiceover or a sound effect, and the video just… drifts.

Fixing this manually, frame by frame, isn’t really a practical do-it-yourself job. You want the AI to nail it on the first pass. But even in 2025, audio-video sync accuracy drops to 72% in 20-second clips. That’s a lot of bad takes if you’re on a deadline.

Keep Clips Short for Better Results

My favorite approach here is to keep clips short. I know, we all want to generate a whole minute at once, but the longer the generation, the more likely the AI is to “hallucinate” the timing. According to internal OpenAI benchmarks, 95% of Sora-generated videos under 10 seconds maintain prompt adherence above 85% when using Storyboard mode.

I’ve also noticed that neglecting physics is a huge error. Say you ask for a car to stop but don’t describe how it stops. Does it screech? Does it lurch forward? If you don’t describe the physics, the AI floats the car to a halt like it’s on ice.
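To put the keep-clips-short advice into practice, you can split a long sequence into segments of at most 10 seconds before prompting each one. The 10-second ceiling comes from the benchmark quoted above; `split_duration` is a hypothetical helper of my own, not a Sora feature.

```python
def split_duration(total_seconds: float, max_clip: float = 10.0) -> list[tuple[float, float]]:
    """Return (start, end) pairs covering total_seconds in clips <= max_clip."""
    if total_seconds <= 0 or max_clip <= 0:
        raise ValueError("durations must be positive")
    segments = []
    start = 0.0
    while start < total_seconds:
        end = min(start + max_clip, total_seconds)
        segments.append((start, end))
        start = end
    return segments


# A one-minute idea becomes six short clips you can prompt (and fix) one at a time.
for start, end in split_duration(60):
    print(f"{start:.0f}s to {end:.0f}s")
```

Each segment then gets its own prompt, which also makes it cheaper to regenerate only the clip that went wrong.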

🔧 Tool Recommendation (the boring but important bit)

Struggling with complex motion prompts? Our video generation tools help break down scene dynamics into manageable tokens, ensuring your action sequences actually obey the laws of physics.

By the way, if you’re dealing with weird visual glitches in other AI tools, take a look at why ChatGPT images fail and how to fix them. The principles of being specific apply there too.

Sora Cinematic Prompts vs. Legal Risks

Now, here’s the thing we have to talk about. The legal stuff. I know, it’s boring, but it’s important. With deepfake fraud cases rising 1,740% in North America recently, platforms are getting super strict.

If your prompt fails, it might be because you’re triggering a safety filter. You might be trying to generate a celebrity likeness or a copyrighted character without realizing it. The models are trained to refuse this stuff now.

Understanding Commercial Rights

I honestly think this is a good thing for the industry, but it can be annoying when you’re just trying to make a parody or a fan film. If you get a “refused” message, check your prompt for names of real people or trademarked terms.
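Before you spend credits, a quick local check can catch the obvious cases. This is a naive sketch under loud assumptions: `BLOCKED_TERMS` is a placeholder list you’d maintain yourself, and it has no connection to the filters Sora actually uses, so passing it does not mean a prompt will be accepted.

```python
import re

# Placeholder terms only (an assumption for illustration); keep your own list
# of real people's names and trademarked characters.
BLOCKED_TERMS = ("darth vader", "mickey mouse")


def flag_risky_terms(prompt: str, blocked: tuple[str, ...] = BLOCKED_TERMS) -> list[str]:
    """Return blocked terms that appear as whole words or phrases in the prompt."""
    lowered = prompt.lower()
    return [
        term for term in blocked
        if re.search(rf"\b{re.escape(term)}\b", lowered)
    ]


print(flag_risky_terms("A parody where Darth Vader orders coffee"))
```

The `\b` word boundaries stop a term from matching inside a longer word, but a list like this will always miss spellings and nicknames it doesn’t contain.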

Also, for the pros, you need to think about commercial rights. If you’re on a free plan or using a shady workaround, you might not own that video. The Pro plans usually include commercial rights. This is why agencies are willing to pay that $127 average cost per clip: it’s cheaper than a lawsuit.

We’re also seeing a trend towards “watermark mandates” in about 45% of platforms. So if your prompt works but the video has a big ugly watermark, that’s a failure in my book if you intended to use it for a client.

How to Get Started with Advanced Storyboarding

So, how do we put this all together? If you want to stop failing and start creating, you need a workflow. You can’t just wing it.

Indie filmmaker Alex Chen managed to reduce production time from 20 hours to 4.2 hours using Storyboard with five timed descriptors, achieving 92% prompt adherence. How? He didn’t just type prompts. He used a structured Storyboard approach.

Your Five-Step Workflow

Here’s what you want to do:

- **Script it out first.** Don’t touch the AI yet. Write down your shots.

- **Define your style.** Create a “style preset” prompt that you paste at the start of every generation.

- **Sequence your shots.** Use the Storyboard mode to say “Shot 1: [Prompt]”, “Shot 2: [Prompt]”.

- **Time your descriptors.** If something happens at second 3, say “At 00:03, the car explodes.”

- **Iterate quickly.** Use the feedback from each generation to refine the next.
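Steps 2 through 4 can be sketched as a small formatter that pastes one style preset in front of numbered shots and writes timed descriptors in the “At 00:03, …” form used above. The `Shot` structure, the preset text, and the exact layout are my assumptions for illustration; check how your tool’s Storyboard mode expects its input before relying on this.

```python
from dataclasses import dataclass, field

# Assumed example preset, pasted at the top of every storyboard.
STYLE_PRESET = "cyberpunk, neon-lit rain, 35mm film grain, 24fps"


@dataclass
class Shot:
    prompt: str
    # (second, event) pairs, e.g. (3, "the car explodes")
    events: list[tuple[int, str]] = field(default_factory=list)


def timestamp(seconds: int) -> str:
    """Format whole seconds as MM:SS, e.g. 3 -> '00:03'."""
    minutes, secs = divmod(seconds, 60)
    return f"{minutes:02d}:{secs:02d}"


def format_storyboard(shots: list[Shot], preset: str = STYLE_PRESET) -> str:
    """Render the preset, numbered shots, and time-ordered descriptors as text."""
    lines = [f"Style: {preset}"]
    for number, shot in enumerate(shots, start=1):
        lines.append(f"Shot {number}: {shot.prompt}")
        for second, event in sorted(shot.events):
            lines.append(f"  At {timestamp(second)}, {event}.")
    return "\n".join(lines)


board = format_storyboard([
    Shot("A muscle car races down a wet street, low angle", [(3, "the car explodes")]),
    Shot("Wide shot of the burning wreck, slow push-in"),
])
print(board)
```

Sorting each shot’s events by time means you can jot descriptors down in any order while scripting and still hand the model a clean timeline.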

⭐ Creator Spotlight: Alex Chen

Indie filmmaker Alex Chen used a “cyberpunk preset” and 5 timed descriptors per scene to achieve 92% prompt adherence. By remixing 3 clips via specific commands like “change attire to neon,” he created a cohesive narrative that looked like a high-budget production.

Looking ahead to 2026, we’re probably going to see pricing models change to something like per-compute pricing. Imagine paying about $0 per second of video. If that happens, you really can’t afford to be lazy with your prompts.

Sam Altman has said that ‘Sora will fundamentally change how humans create and consume video content,’ but the prompting learning curve causes a 67% initial failure rate. So take the time to learn the syntax: Subject, Action, Environment, Style, Motion. If you hit those five notes every time, you’re going to see your success rate skyrocket.

Thanks for sticking with me on this one. Sora 2 reached 10 million+ users in a single month via ChatGPT Plus subscriptions following its launch, so you’re part of a massive wave of creators figuring this out together. Once you get the hang of it, it’s actually pretty fun.

Frequently Asked Questions

What are the most common mistakes users make with Sora cinematic prompts?

The biggest mistake is being too vague with descriptors, which is linked to a 67% failure rate. Users often forget to specify lighting, camera angles, and physics, leading to generic or static outputs.

How does Sora compare to other AI video creation tools for user experience?

Sora generally offers higher motion fidelity and prompt adherence, especially with its new Storyboard mode, but users often find the learning curve for precise prompting steeper than simpler, less capable tools.

What specific features of Sora contribute to its high-quality video output?

Sora’s 3D-aware space-time attention architecture allows it to understand object permanence and physics better than previous models, improving motion realism by over 40%.

How does the pricing model of Sora affect its adoption by different user groups?

The tiered subscription model restricts heavy usage to professionals who can afford Pro plans. Casual users often hit credit limits quickly, though per-compute pricing models expected in 2026 may lower the barrier to entry.

What are the legal consequences of using AI-generated video content like Sora?

Users face risks regarding deepfakes and copyright infringement. That is why commercial rights are typically locked behind paid tiers and platforms are increasingly enforcing watermark mandates to track AI content.
